Preface

Recently, we learned about a project at DB Systel GmbH where the team achieved 20x speedups in the data processing behind optimizing German train schedules and mitigating delays. Not only did they realize impressive performance improvements, but they also reduced costs by using smaller compute instances, in line with the company’s goal of lowering emissions. In this case study, Maren and her colleague Sebastian share their firsthand experiences of migrating their workload to Polars.

About DB Systel GmbH

DB Systel GmbH provides digital solutions for the Deutsche Bahn AG Group, Germany’s national railway provider. It develops and assesses new and emerging digital technologies and trends with the aim of making genuinely effective contributions to the rail system as a whole. DB Systel GmbH follows a cloud-first strategy and puts a strong focus on sustainability in and through digitalization. The company has more than 7,000 employees from 101 countries and a higher share of female employees (25.7%) than the German IT sector in general (approx. 17%).[1]

In search of a faster alternative

Sebastian Folz and Maren Westermann work in the “AI Factory” department of DB Systel GmbH, a subsidiary of Deutsche Bahn AG. They use machine learning to optimize Germany’s train scheduling system, preventing train tracks from being loaded beyond their capacity and, as a consequence, delays from building up. The data they work with covers Germany’s entire railway network, the largest railway network in Europe.

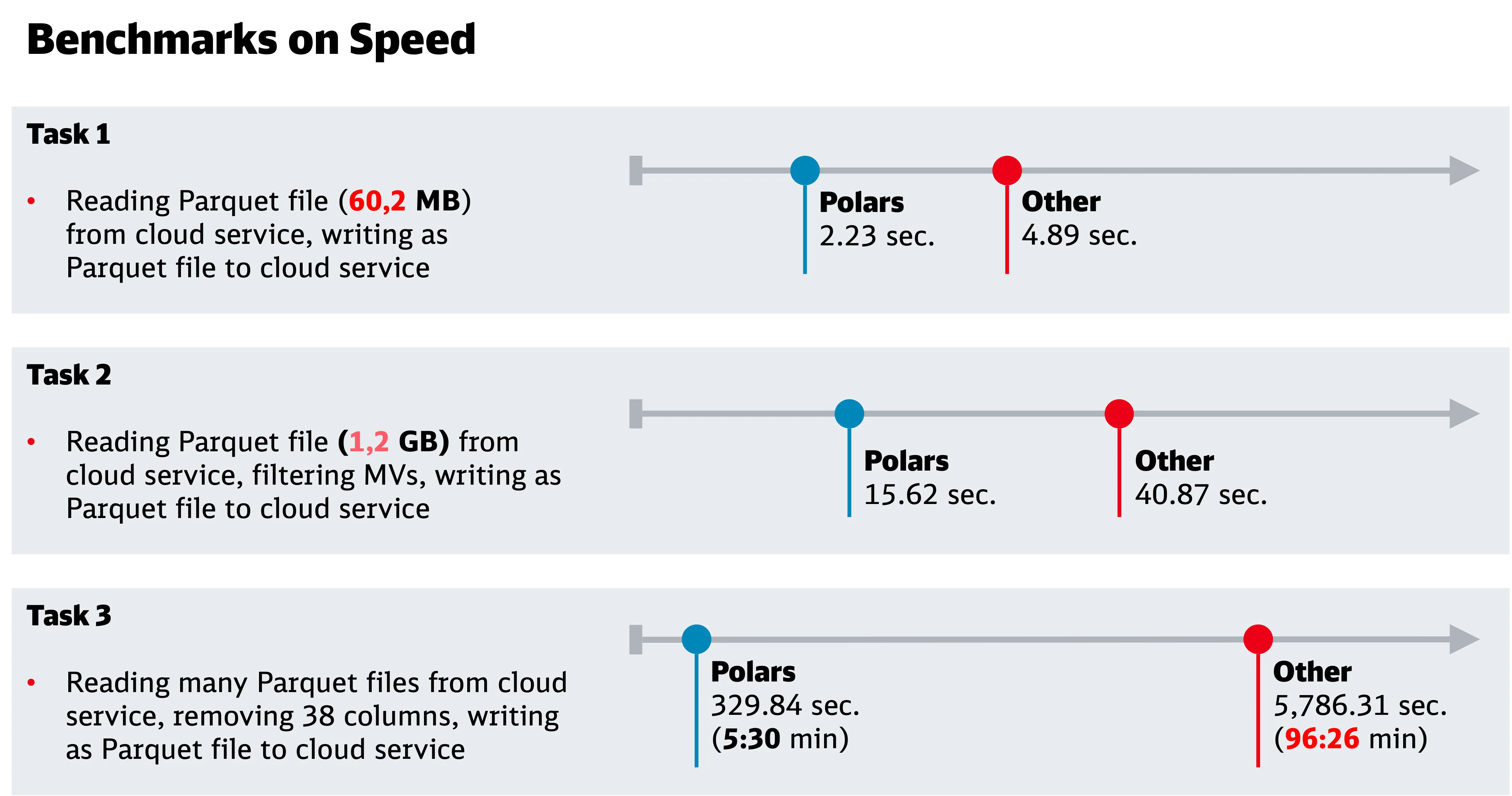

“It all started on a Friday afternoon during a busy week, when I had about 90 minutes left until the weekend. I had to reprocess some data with a well-known Python framework after finding an error in the code. That code had just run for 96 minutes,” said Sebastian.

Instead of waiting for the computation to finish, he searched for a faster alternative framework. He eventually settled on Polars as the most promising candidate: it is easy to install, uses familiar coding patterns, and is written in Rust.

Sebastian managed to adapt the data transformation code to Polars and run it to completion on that Friday afternoon, in time for the weekend. The code itself ran in an amazing 5.5 minutes, down from the previous 96 minutes.

This unexpected success prompted a series of further experiments on Polars’ performance. At the time, Polars was at version 0.20.24. The results showed that it was reproducibly faster than the framework then in use.

Saving on cloud computing through memory efficiency

Polars was not only faster. The experiments also evaluated memory consumption during data processing and showed that Polars needs far less memory than the other framework, thanks to its lazy mode of operation. In contrast to eager evaluation, which executes each operation immediately, lazy evaluation defers all operations until the query is materialized, so the query engine can optimize the whole query before running it.

In this case, the query was only executed when the processed data was written back to disk. In addition, depending on the operation, the other framework made a copy of the full dataframe, which Polars avoids. Both factors improve memory efficiency, which has direct benefits for the company: the cloud computing instances can be much smaller, which saves costs and improves the environmental balance, because fewer computing resources mean lower carbon dioxide emissions. A perfect match for the company’s strategic aspirations.

Everyday experience working with Polars

In our daily work, we see that the other framework quickly runs into out-of-memory errors. This is not only frustrating but also costly when you constantly have to re-engineer otherwise perfectly working code to make it more memory efficient. Since we started working with Polars, we rarely encounter such problems, which makes our daily work a lot easier.

Writing fast-running code makes my inner child smile. It’s like having a fast RC car. And if it’s memory efficient too, that RC car will use far fewer batteries.

Sebastian Folz, Machine Learning Engineer @ DB Systel

Conclusion

The easily attainable benefits of Polars in speed, cost and environmental balance sparked great interest within the company. Other teams are now testing the library for their own use cases. We see clear advantages in using Polars and are eager to keep using this state-of-the-art framework in future projects.

About the authors

Sebastian Folz is a passionate machine learning engineer who has contributed to open source projects in the past and recently discovered his enthusiasm for Polars. He can often be found at Python and machine learning meetups. You can follow him on LinkedIn.

Maren Westermann is a co-organiser of PyLadies Berlin, a member of the contributor experience team of the open source project scikit-learn, and a contributor to Polars. You can follow her on LinkedIn or Mastodon.

Footnotes

[1] Percentage of female employees in the IT sector (absolute and percentage values) for selected countries. Source for Female Tech Workforce EU Countries: Eurostat (2019a), available online at http://appsso.eurostat.ec.europa.eu/nui/submitViewTableAction.do (last update: 13 July 2021).