It’s been a while since we last published benchmark results here. A lot of hard work has gone into optimizing our query engine since then, and other dataframe libraries such as pandas have also made great improvements. Time to see where we stand!

TPC-H benchmark

The TPC-H benchmark is a decision support benchmark. It contains a standardized way to generate data on various scales and a set of SQL queries to run. The queries include various operations such as joins, filters, and group-by operations.

By comparing the performance of various engines on each query, it’s easy to see the strong and weak points of each engine.

Adjusting for DataFrame front-ends

The original TPCH benchmark is intended for SQL databases and doesn’t allow modification of the SQL queries. We are trying to benchmark both SQL front-ends and DataFrame front-ends, so the original rules have to be modified a little. We believe that the SQL queries should be translated semantically to the idiomatic query of the host tool. To do this, we adhere to the following rules:

- It is not allowed to insert new operations, e.g. no pruning a table before a join.

- Every solution must provide one query per question independent of the data source.

- The solution must call its own API.

- It is allowed to declare the type of joins as this fits semantical reasoning in DataFrame APIs.

- A solution must choose a single engine/mode for all the queries. It is allowed to propose different solutions from the same vendor, e.g. (spark-sql, pyspark, polars-sql, polars-default, polars-streaming). However these solutions should run all the queries, showing their strengths and weaknesses. No cherry picking.

- Joins may not be reordered manually.

Setup

Our implementation of the benchmark is open source and available on GitHub.

Libraries

We benchmarked the latest versions of the following libraries:

The following configuration is relevant for the various libraries:

- For PySpark, driver memory and executor memory were set to

20gand10grespectively. - For Dask, the

threadsscheduler was used. - For pandas/Dask/Modin, PyArrow data types were enabled.

- For pandas/Modin, copy-on-write optimalizations were enabled.

- For Modin, the Ray backend was used and Modin memory was set to

90_000_000_000(90 GB).

Note that we did not include vaex in the benchmarks because it is no longer being maintained.

Common hardware/software

All queries were run under the following conditions:

- Machine: Google Cloud VM c3-highmem-22 (22 vCPUs / 178 GB memory)

- OS: Ubuntu 22.02 LTS x86-64

- Python version: 3.11.9

Scale factor

TPC-H allows for running queries at various data sizes. We chose scale factor 10, which translates to about 10 gigabytes of data. We think this represents the scale of data that is most relevant to Polars users.

Queries

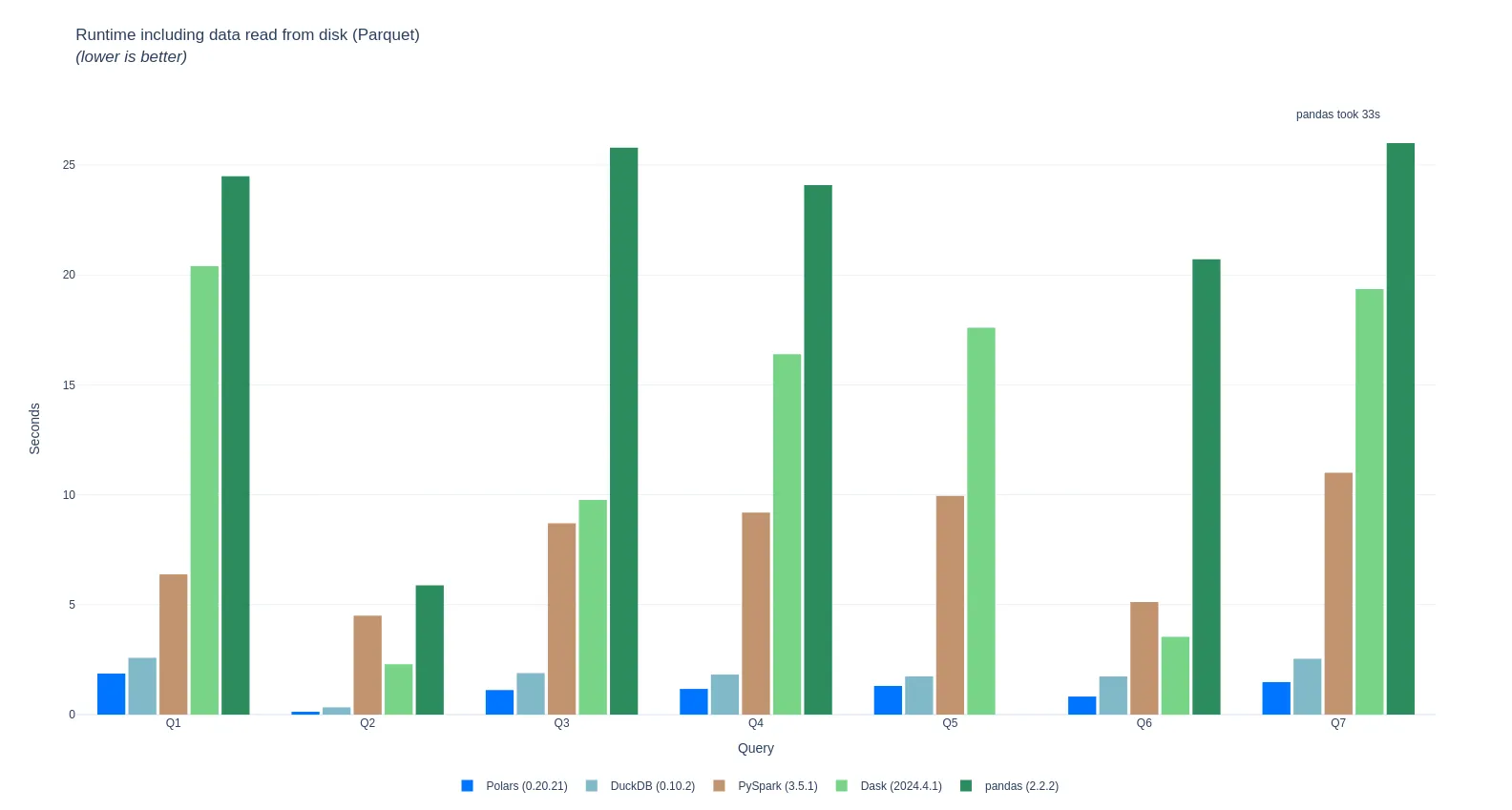

TPC-H offers a total of 22 different queries. We restrict ourselves to the first 7 queries right now, as we do not have pandas/Dask implementations of the other queries.

I/O

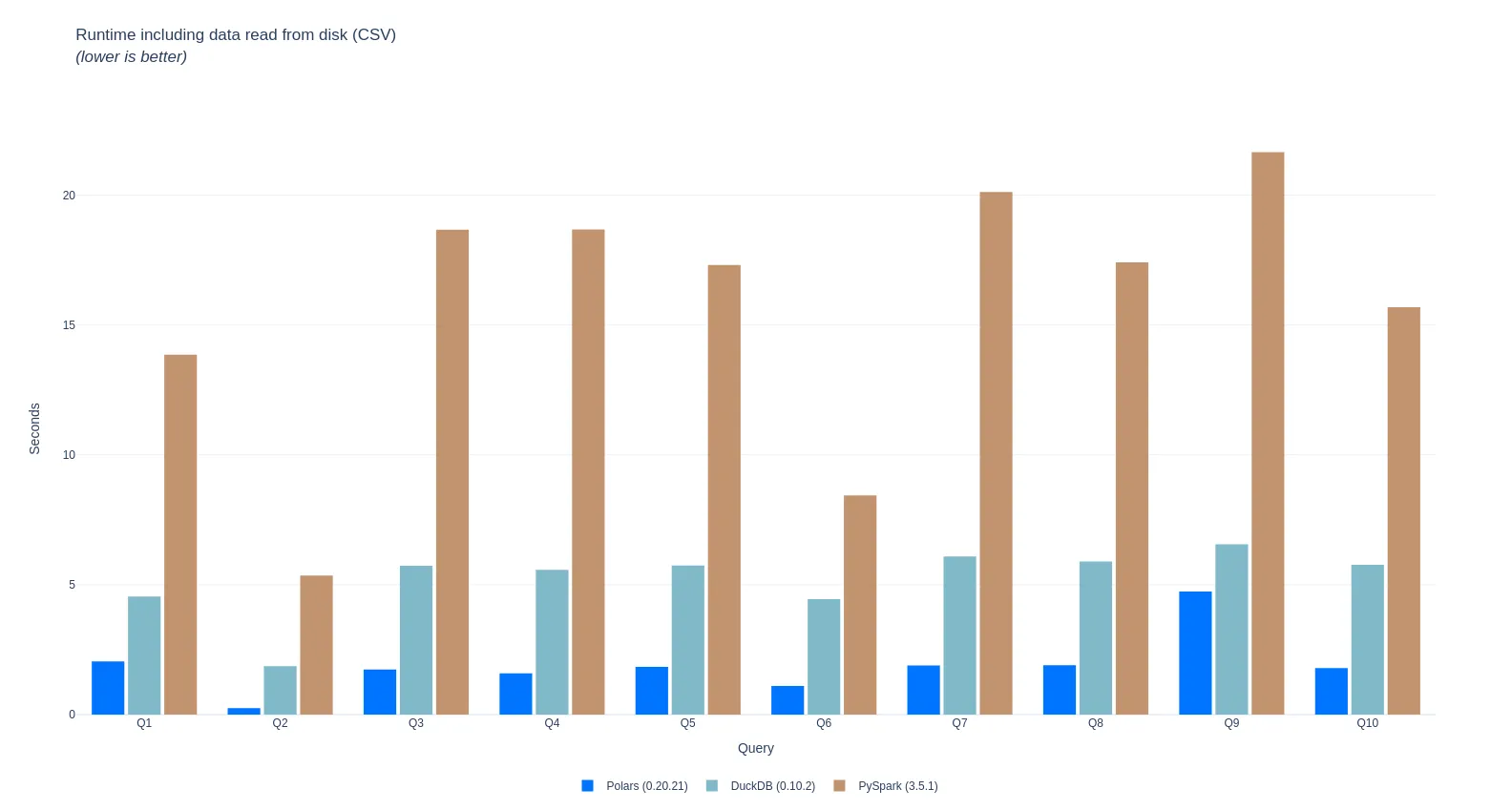

The data is stored on disk as Parquet files and CSV files. We run three versions of the experiments: one for each file type (Parquet/CSV) where reading the data from disk is included, and one where the data has already been read into memory.

Results

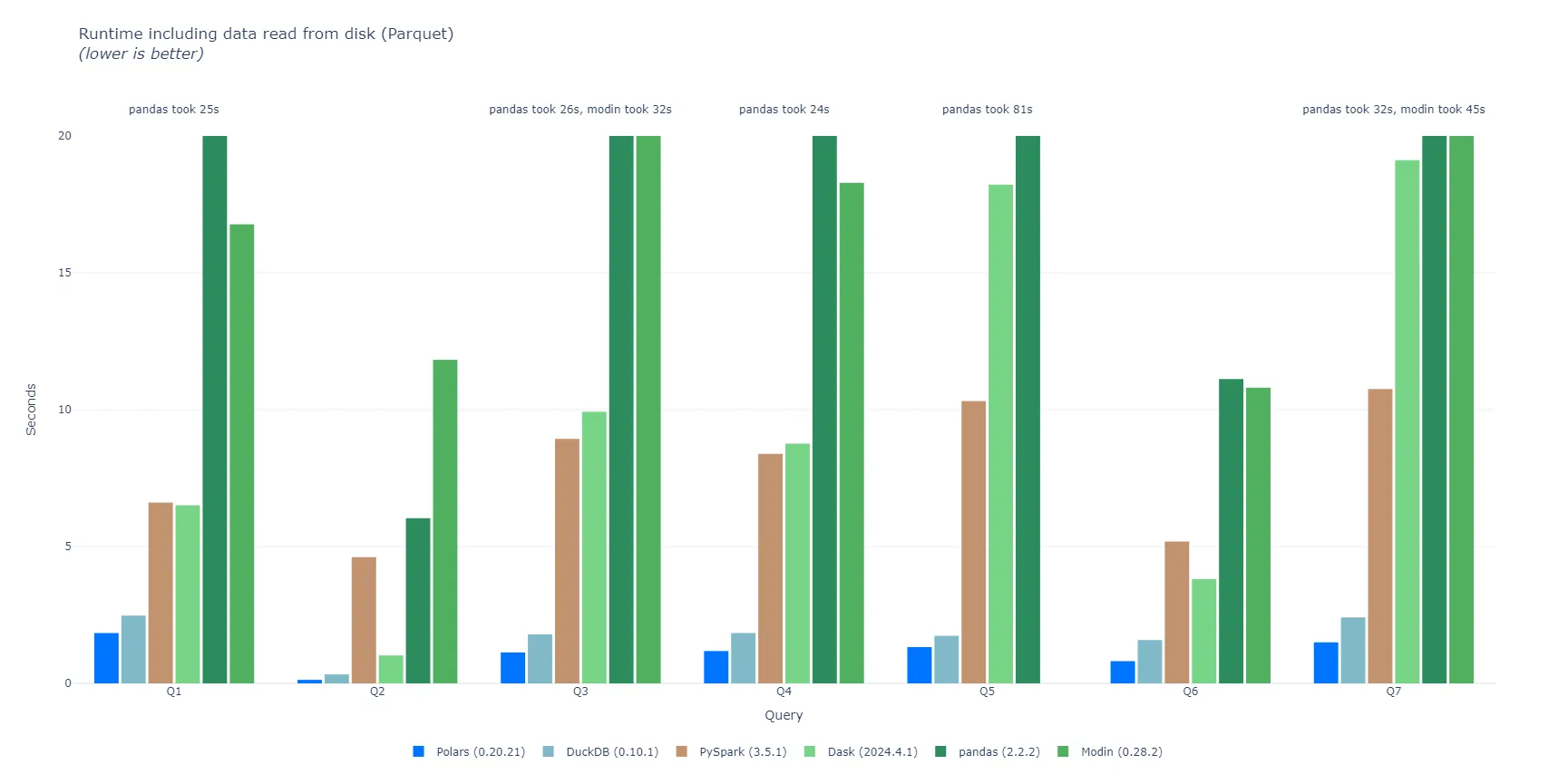

Including data read from disk (Parquet)

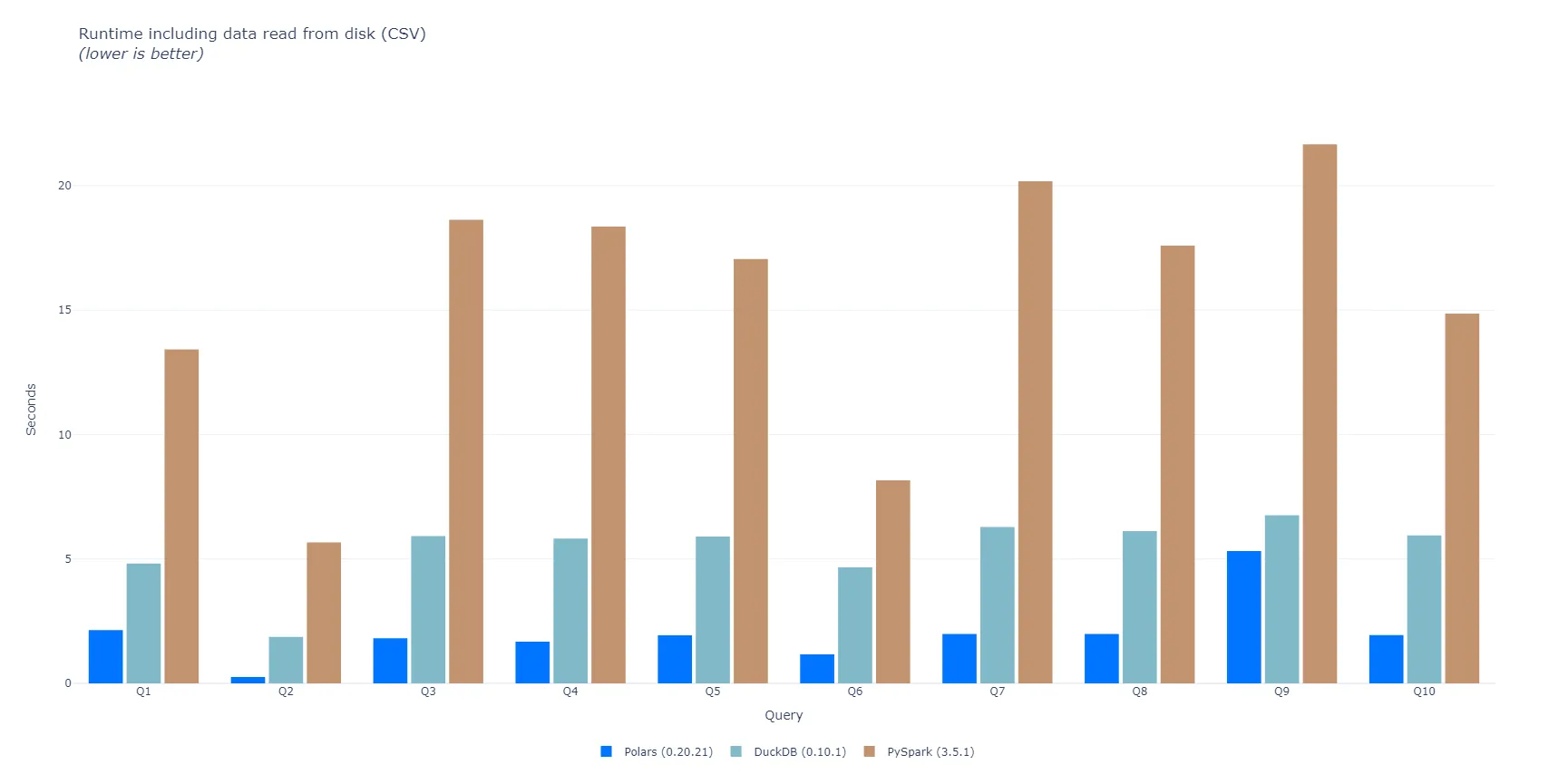

Including data read from disk (CSV)

Note that pandas/Dask/Modin were excluded here. pandas obviously does have a CSV reader, but we have not yet implemented a performant way to read CSV files with the correct pyarrow schema.

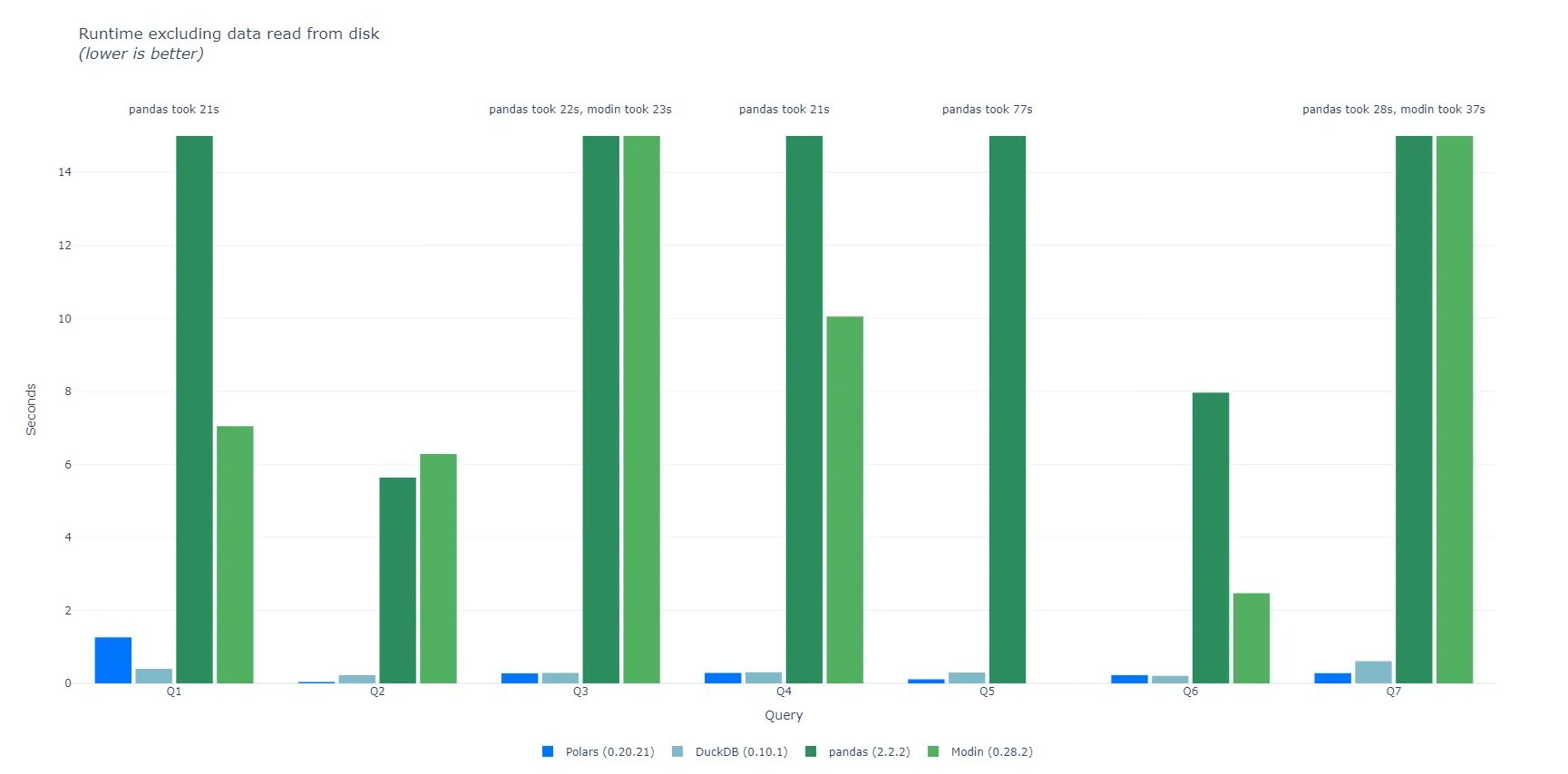

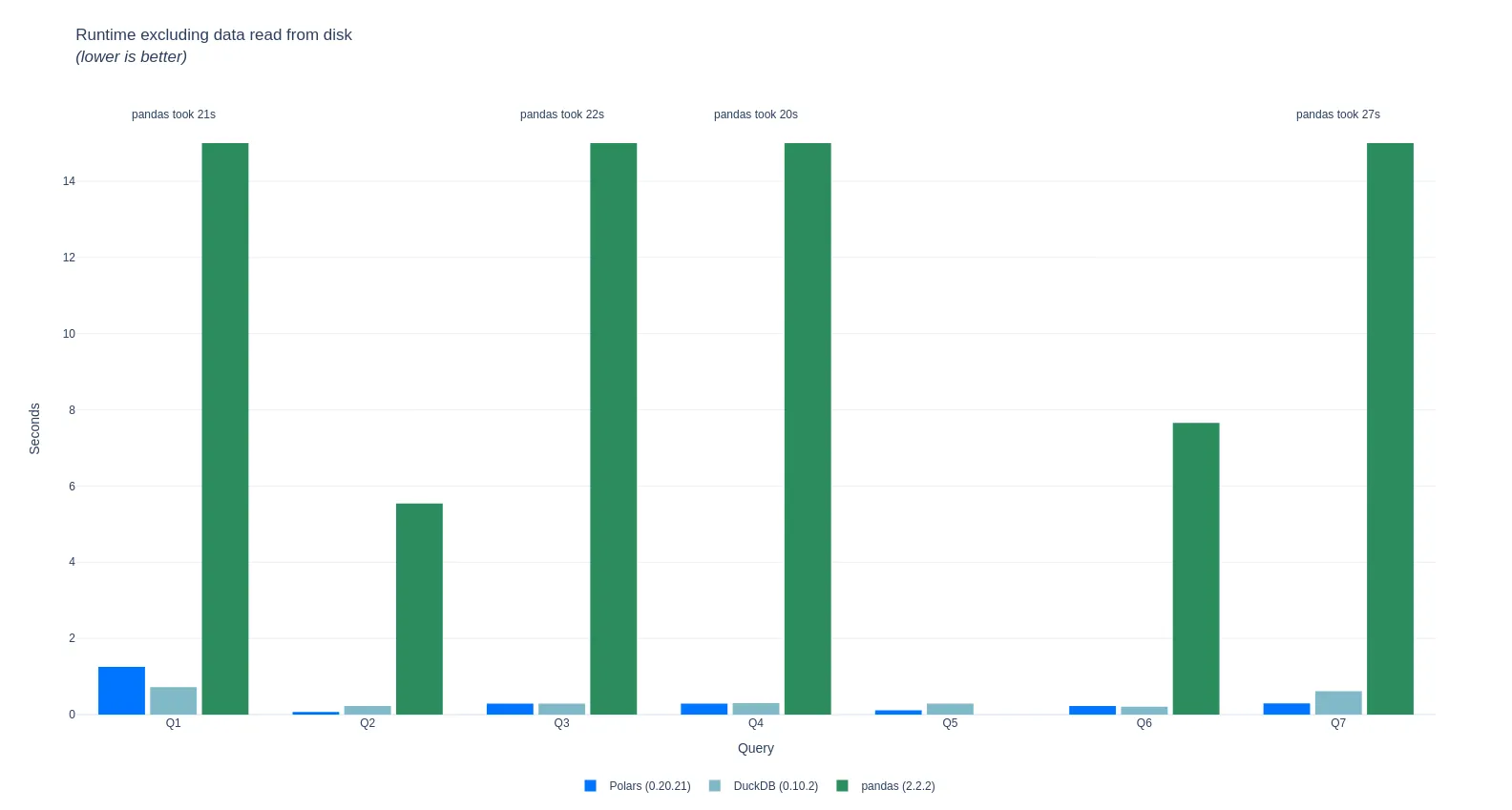

Excluding data read from disk

Note that Dask was excluded here, because it does not support loading Date types from a PyArrow-backed pandas dataframe.

PySpark was also excluded as we have not yet implemented a way to persist the data in memory before executing the query.

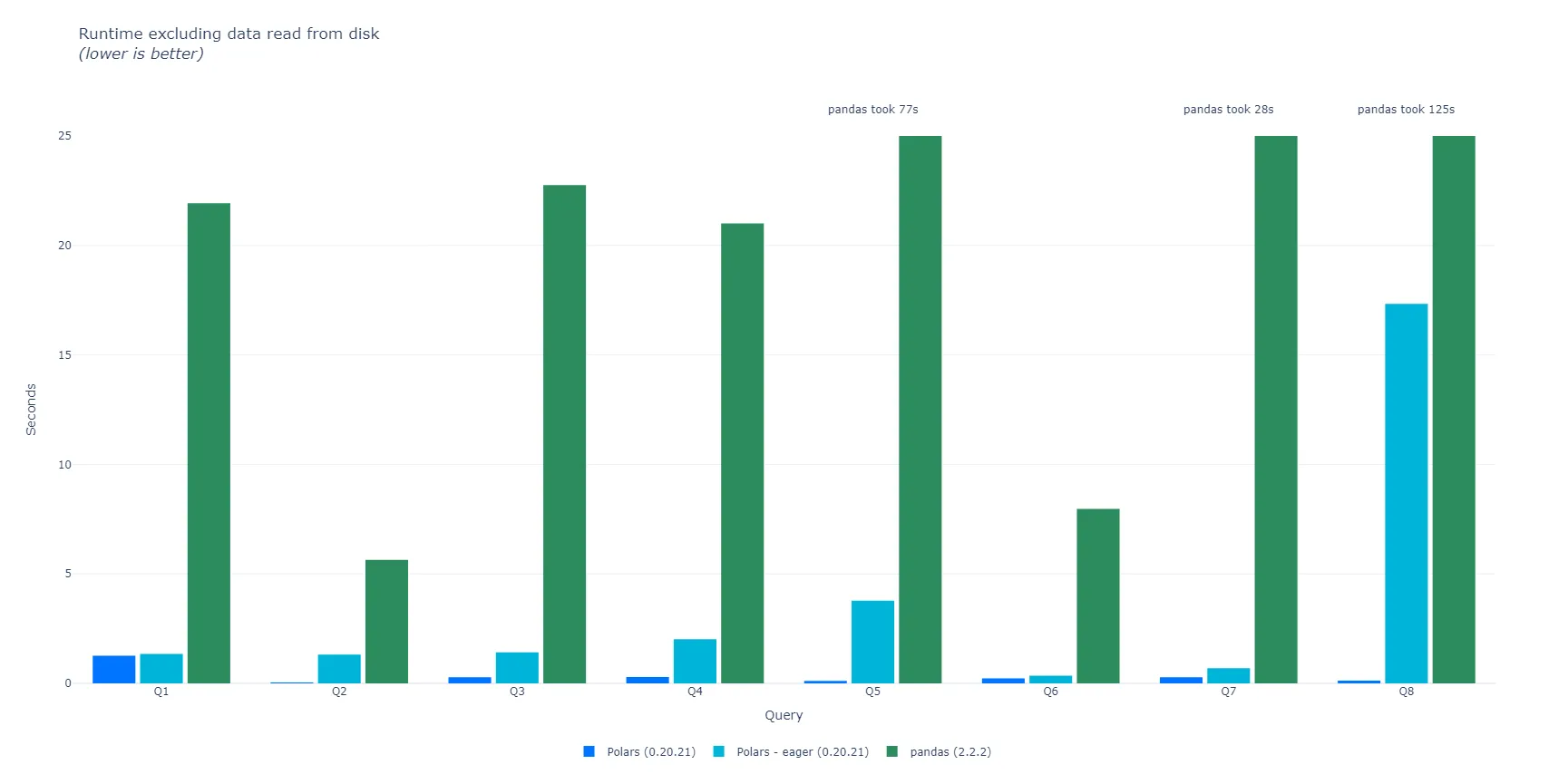

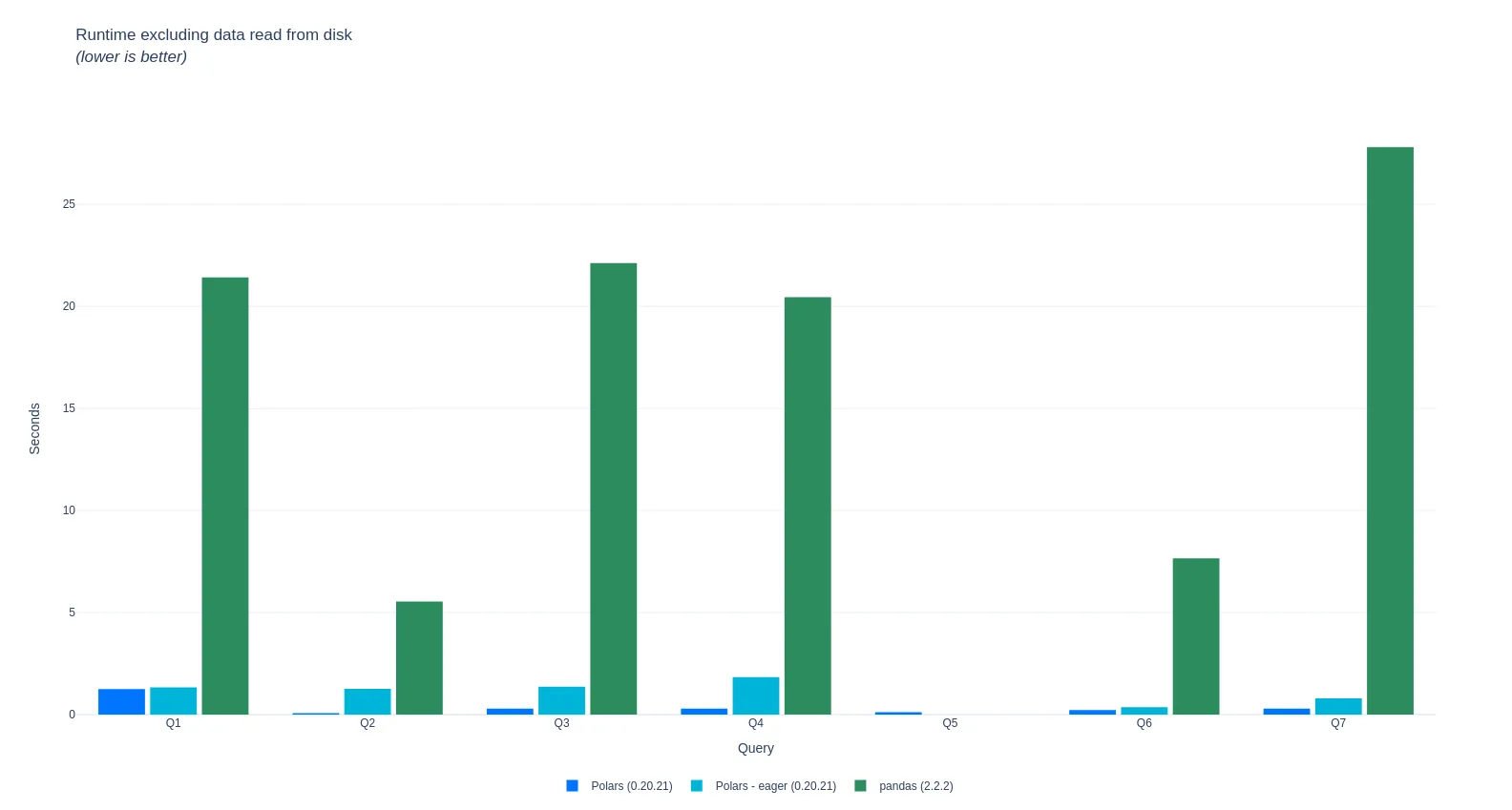

(Bonus) Polars without optimization

We thought it would be neat to show the performance of Polars with all query optimizations turned off. This allows for a more direct comparison with pandas, since pandas does not do query optimization. It also shows how powerful the Polars optimizer really is!

Takeaways

In terms of raw performance, Polars is the fastest among dataframe libraries. DuckDB, which is a pure SQL engine, has comparable performance.

pandas does not perform well on most queries. Modin is a mixed bag: it does better than pandas on some queries, and worse on others. It could not complete all queries due to various memory issues.

Dask manages to significantly improve on pandas’ performance on many queries, but does not match Polars or DuckDB. PySpark performance seems to be in the same ballpark as Dask.

We should note that PySpark and Dask are intended to run on distributed systems. This benchmark is run on a single machine, so it does not play to their strengths.

In closing

We’re happy to see that Polars is still ahead of the competition in terms of performance.

We intend to re-run these benchmarks a few times per year to track our progress. Stay tuned!

Addendum - Benchmarks on a smaller machine

We received feedback on this blog post that the machine used in the benchmarks may be too powerful. It has much more memory (176GB) than should be required for the task (processing ~10GB of data).

So we re-ran the benchmarks on a smaller machine: a c3-highcpu-22 with 22 vCPUs and 44GB of memory. This should be enough for the task and is pretty close to the pandas guidelines of having 5x the data size available as memory.

The results do not change significantly, with the exception that Modin was not able to complete any queries on this machine (MODIN_MEMORY set to 22GB).

Including data read from disk (Parquet)

pandas is not able to complete query 5 on this machine.

Including data read from disk (CSV)

Excluding data read from disk

Polars without optimization

Without optimization, Polars is also not able to complete query 5.